Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple | by Alejandro Saucedo | Towards Data Science

Amazon.com: Hands-On GPU Computing with Python: Explore the capabilities of GPUs for solving high performance computational problems: 9781789341072: Bandyopadhyay, Avimanyu: Books

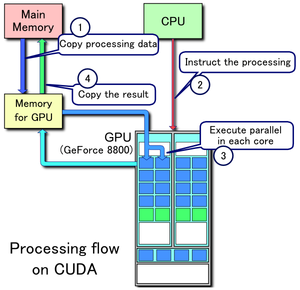

A Complete Introduction to GPU Programming With Practical Examples in CUDA and Python - Cherry Servers

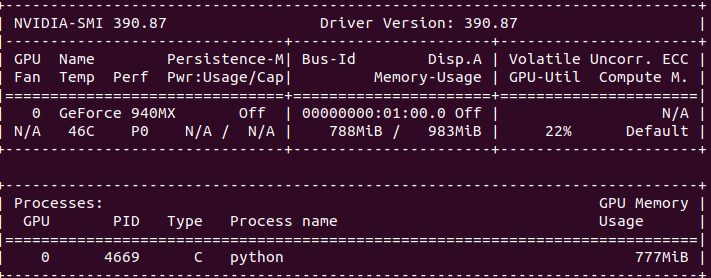

How to dedicate your laptop GPU to TensorFlow only, on Ubuntu 18.04. | by Manu NALEPA | Towards Data Science

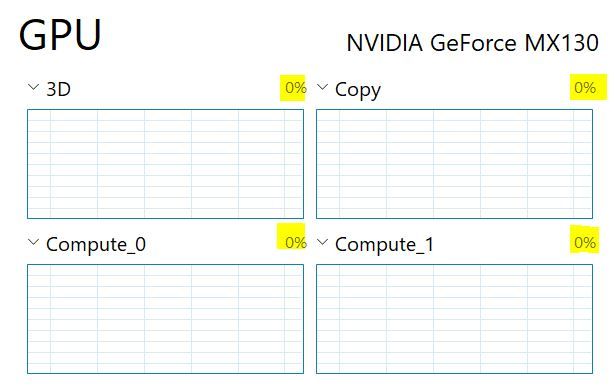

Executing a Python Script on GPU Using CUDA and Numba in Windows 10 | by Nickson Joram | Geek Culture | Medium

Executing a Python Script on GPU Using CUDA and Numba in Windows 10 | by Nickson Joram | Geek Culture | Medium

NVIDIA's Answer: Bringing GPUs to More Than CNNs - Intel's Xeon Cascade Lake vs. NVIDIA Turing: An Analysis in AI